VPP Containerlab Docker image

User Documentation

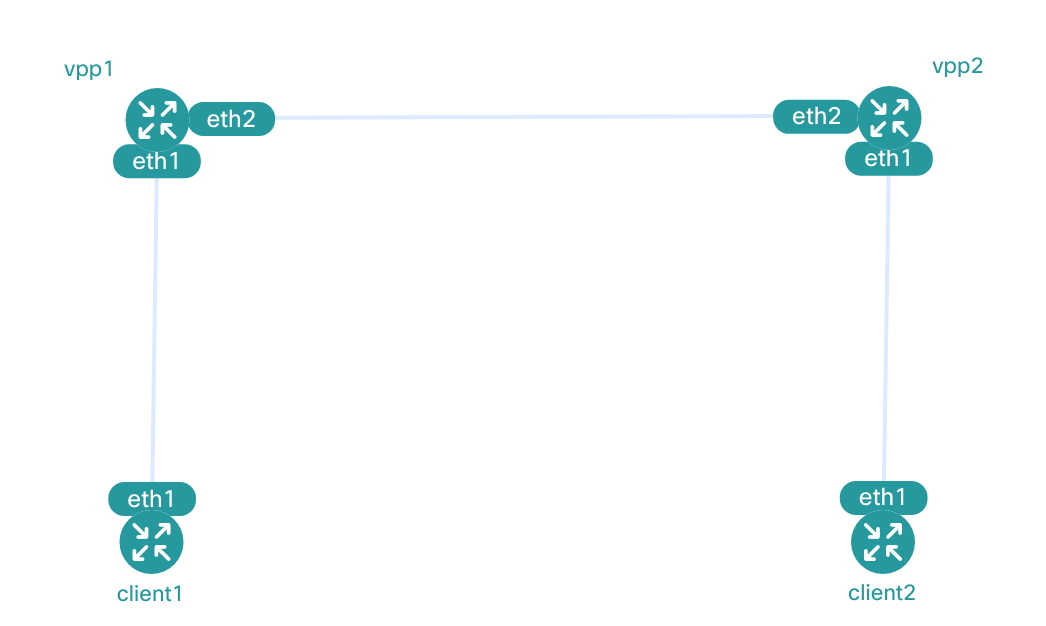

The file vpp.clab.yml contains an example topology consisting of two VPP instances, each connected to one Alpine Linux container (see the diagram above).

You can deploy it using containerlab deploy --topo vpp.clab.yml.

Two relevant files for VPP are included in this repository:

- config/vpp*/vppcfg.yaml configures the dataplane interfaces, including a loopback address.
- config/vpp*/bird-local.conf configures the controlplane to enable BFD and OSPF.

Once the lab comes up, you can SSH to the VPP containers (vpp1 and vpp2), which will have your SSH keys installed (if available). Otherwise, you can log in as user root using password vpp.

VPP runs in its own network namespace called dataplane, which is very similar to the SR Linux default network-instance. You can join it to take a look:

pim@summer:~/src/vpp-containerlab$ ssh root@vpp1

root@vpp1:~# nsenter --net=/var/run/netns/dataplane

root@vpp1:~# ip -br a

lo DOWN

loop0 UP 10.82.98.0/32 2001:db8:8298::/128 fe80::dcad:ff:fe00:0/64

eth1 UNKNOWN 10.82.98.65/28 2001:db8:8298:101::1/64 fe80::a8c1:abff:fe77:acb9/64

eth2 UNKNOWN 10.82.98.16/31 2001:db8:8298:1::1/64 fe80::a8c1:abff:fef0:7125/64

root@vpp1:~# ping 10.82.98.1 ## The vpp2 IPv4 loopback address

PING 10.82.98.1 (10.82.98.1) 56(84) bytes of data.

64 bytes from 10.82.98.1: icmp_seq=1 ttl=64 time=9.53 ms

64 bytes from 10.82.98.1: icmp_seq=2 ttl=64 time=15.9 ms

^C

--- 10.82.98.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 9.530/12.735/15.941/3.205 ms

The two clients each run a minimal Alpine Linux container, which doesn't ship with SSH by default. You can enter the containers as follows:

pim@summer:~/src/vpp-containerlab$ docker exec -it client1 sh

/ # ip addr show dev eth1

531235: eth1@if531234: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 9500 qdisc noqueue state UP

link/ether 00:c1:ab:00:00:01 brd ff:ff:ff:ff:ff:ff

inet 10.82.98.66/28 scope global eth1

valid_lft forever preferred_lft forever

inet6 2001:db8:8298:101::2/64 scope global

valid_lft forever preferred_lft forever

inet6 fe80::2c1:abff:fe00:1/64 scope link

valid_lft forever preferred_lft forever

/ # traceroute 10.82.98.82

traceroute to 10.82.98.82 (10.82.98.82), 30 hops max, 46 byte packets

1 10.82.98.65 (10.82.98.65) 5.906 ms 7.086 ms 7.868 ms

2 10.82.98.17 (10.82.98.17) 24.007 ms 23.349 ms 15.933 ms

3 10.82.98.82 (10.82.98.82) 39.978 ms 31.127 ms 31.854 ms

/ # traceroute 2001:db8:8298:102::2

traceroute to 2001:db8:8298:102::2 (2001:db8:8298:102::2), 30 hops max, 72 byte packets

1 2001:db8:8298:101::1 (2001:db8:8298:101::1) 0.701 ms 7.144 ms 7.900 ms

2 2001:db8:8298:1::2 (2001:db8:8298:1::2) 23.909 ms 22.943 ms 23.893 ms

3 2001:db8:8298:102::2 (2001:db8:8298:102::2) 31.964 ms 30.814 ms 32.000 ms

From the vantage point of client1, the first hop represents the vpp1 node, which forwards to vpp2, which finally forwards to client2.

Developer Documentation

This Docker container creates a VPP instance based on the latest VPP release. It starts up as normal, using /etc/vpp/startup.conf (which Containerlab might replace when it starts its containers). Once started, it executes /etc/vpp/bootstrap.vpp within the dataplane. There are two relevant files:

- clab.vpp -- generated by files/init-container.sh. Its purpose is to bind the veth interfaces that containerlab has added to the container into the VPP dataplane (see below).
- vppcfg.vpp -- generated by files/init-container.sh. Its purpose is to read the user-specified vppcfg.yaml file and convert it into VPP CLI commands. If no YAML file is specified, or if it is not syntactically valid, an empty file is generated instead.
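The "empty file on failure" behaviour described for vppcfg.vpp can be sketched roughly as follows. This is only an illustration, not the contents of files/init-container.sh: the function name render_or_empty is hypothetical, and the converter is passed in as an arbitrary command because the exact invocation used by the image is not shown here.

```shell
#!/bin/sh
# Hypothetical helper: run a converter that turns a YAML file into VPP CLI
# commands. If the YAML is missing or the converter fails, leave an empty file.
# Usage: render_or_empty <yaml-file> <output-file> <converter command...>
render_or_empty() {
  yaml="$1"; out="$2"; shift 2
  : > "$out"                       # start from an empty output file
  [ -r "$yaml" ] || return 0       # no readable YAML: keep the empty file
  tmp="$out.tmp"
  if "$@" "$yaml" > "$tmp" 2>/dev/null; then
    mv "$tmp" "$out"               # conversion succeeded: publish the result
  else
    rm -f "$tmp"                   # conversion failed: keep the empty file
  fi
}
```

Writing to a temporary file first means a converter that fails halfway never leaves partial commands in the file that VPP will execute.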

For Containerlab users who wish to have more control over their VPP bootstrap, it's possible to bind-mount /etc/vpp/bootstrap.vpp.

Building

IMG=git.ipng.ch/ipng/vpp-containerlab

TAG=latest

docker build --no-cache -f docker/Dockerfile.bookworm -t $IMG docker/

docker image tag $IMG $IMG:$TAG

docker push $IMG

docker push $IMG:$TAG

Testing standalone container

docker network create --driver=bridge clab-network --subnet=192.0.2.0/24 \
  --ipv6 --subnet=2001:db8::/64

docker rm clab-pim

docker run --cap-add=NET_ADMIN --cap-add=SYS_NICE --cap-add=SYS_PTRACE \
  --device=/dev/net/tun:/dev/net/tun \
  --device=/dev/vhost-net:/dev/vhost-net \
  --privileged --name clab-pim \
  docker.io/pimvanpelt/vpp-containerlab:latest

docker network connect clab-network clab-pim

A note on DPDK

DPDK is disabled by default, as it requires hugepages and VFIO and/or UIO to use physical network cards. If DPDK is desired at some future point, VFIO can be mapped into the container by adding this:

--device=/dev/vfio/vfio:/dev/vfio/vfio

or in Containerlab, using the devices feature:

my-node:
  image: git.ipng.ch/ipng/vpp-containerlab:latest
  kind: fdio_vpp
  devices:
    - /dev/vfio/vfio
    - /dev/net/tun
    - /dev/vhost-net

If using DPDK in a container, one of the userspace IO kernel drivers must be loaded in the host kernel. Options are igb_uio, vfio_pci, or uio_pci_generic:

$ sudo modprobe igb_uio

$ sudo modprobe vfio_pci

$ sudo modprobe uio_pci_generic

In particular, the VFIO driver needs to be loaded before you attempt to bind-mount /dev/vfio/vfio into the container!
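A quick pre-flight check on the host can catch a missing driver before the container is started. The sketch below only tests that the device node exists as a character device. The helper name have_char_device is hypothetical and not part of this repository.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the given path exists and is a
# character device. Defaults to /dev/vfio/vfio, the device named above.
have_char_device() {
  [ -c "${1:-/dev/vfio/vfio}" ]
}

if have_char_device /dev/vfio/vfio; then
  echo "VFIO device present; it can be passed into the container"
else
  echo "VFIO device missing; load the vfio_pci module on the host first" >&2
fi
```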

Configuring VPP

When Containerlab starts the Docker containers, it offers one or more veth point-to-point network links, which show up as eth1 and onward. eth0 is the default NIC that belongs to the management plane in Containerlab (the one you'll see with containerlab inspect). Before VPP can use these veth interfaces, it needs to bind them, like so:

docker exec -it clab-pim vppctl

and then within the VPP control shell:

create host-interface v2 name eth1

set interface name host-eth1 eth1

set interface mtu 1500 eth1

set interface ip address eth1 192.0.2.2/24

set interface ip address eth1 2001:db8::2/64

set interface state eth1 up

Containerlab will attach these veth pairs to the container, and replace our Docker CMD with one that waits for all of these interfaces to be added (typically called if-wait.sh). In our own CMD, we then generate a config file called /etc/vpp/clab.vpp, which contains the necessary VPP commands to take control of these veth pairs.
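To make the generation step concrete, here is a minimal sketch of how such a file could be produced. It is not the actual script shipped in the image: the function names emit_bind_commands and list_dataplane_ifaces are hypothetical, and the emitted commands simply mirror the manual ones shown above. Addresses and MTU are left out on the assumption that they come from vppcfg.yaml.

```shell
#!/bin/sh
# Hypothetical sketch: print the VPP CLI commands that bind each named veth
# interface into the dataplane, mirroring the manual commands shown above.
emit_bind_commands() {
  for ifname in "$@"; do
    echo "create host-interface v2 name $ifname"
    echo "set interface name host-$ifname $ifname"
    echo "set interface state $ifname up"
  done
}

# List interfaces named eth* under a sysfs-style directory, skipping eth0,
# which belongs to the Containerlab management plane.
list_dataplane_ifaces() {
  dir="${1:-/sys/class/net}"
  for path in "$dir"/eth*; do
    [ -e "$path" ] || continue
    name="${path##*/}"
    [ "$name" = "eth0" ] || echo "$name"
  done
}

# Example use inside the container:
#   emit_bind_commands $(list_dataplane_ifaces) > /etc/vpp/clab.vpp
```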

In addition, you can add more commands that'll execute on startup by copying in /etc/vpp/manual-pre.vpp (to be executed before the containerlab stuff) or /etc/vpp/manual-post.vpp (to be executed after the containerlab stuff).
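As a small illustration, a manual-post.vpp file can be prepared on the host and then copied or bind-mounted to /etc/vpp/manual-post.vpp before the container starts. The helper write_vpp_commands below is hypothetical, and the two VPP commands are reused from the example earlier in this section.

```shell
#!/bin/sh
# Hypothetical helper: write a file of extra VPP CLI commands, one command
# per argument, overwriting any previous contents.
write_vpp_commands() {
  out="$1"; shift
  : > "$out"
  for cmd in "$@"; do
    printf '%s\n' "$cmd" >> "$out"
  done
}

# Example: commands to run after the containerlab-generated configuration.
write_vpp_commands "${TMPDIR:-/tmp}/manual-post.vpp" \
  "set interface mtu 1500 eth1" \
  "set interface state eth1 up"
```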